LeRobot

Hugging Face library for real-world robotics

Project Overview

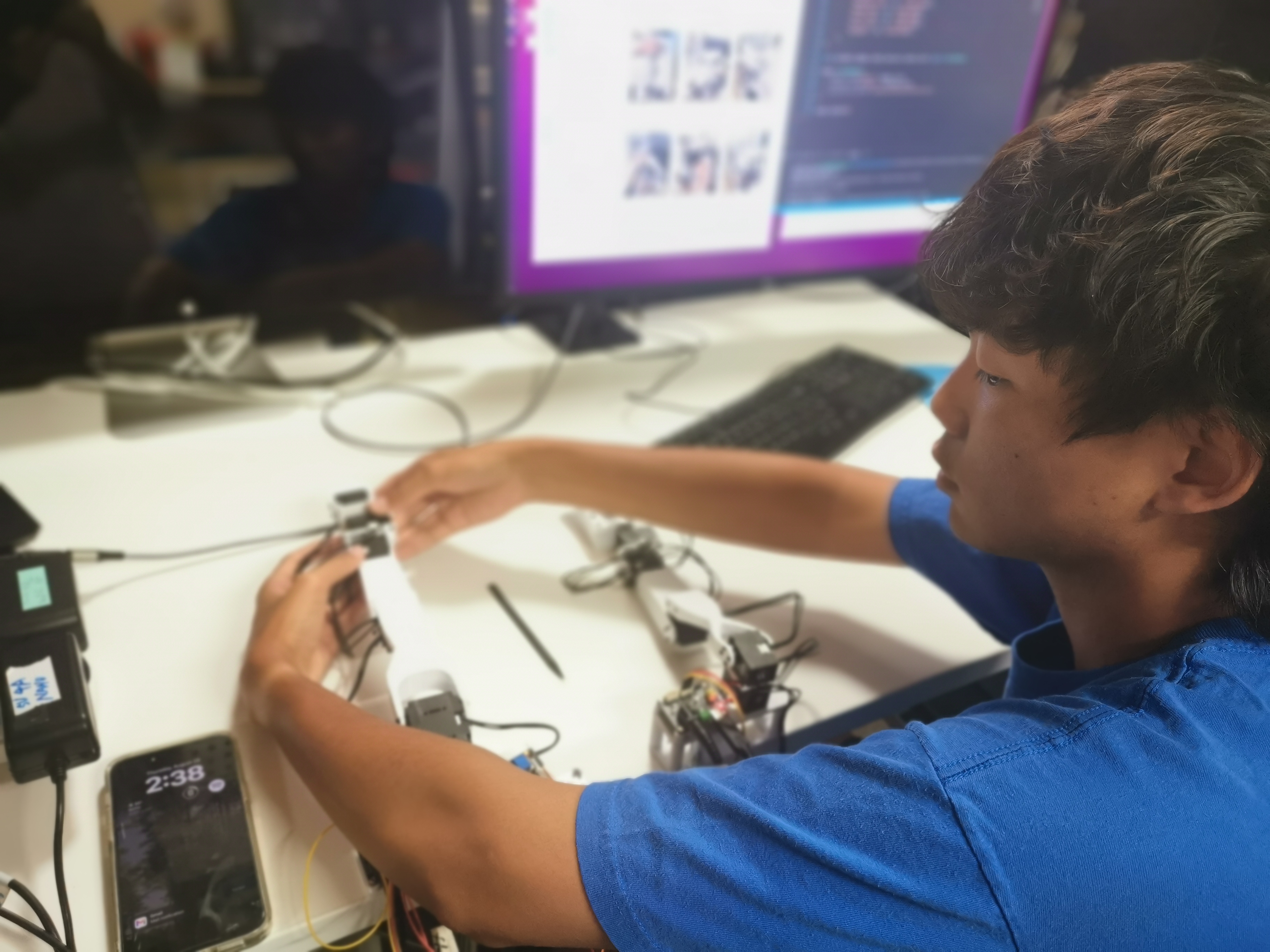

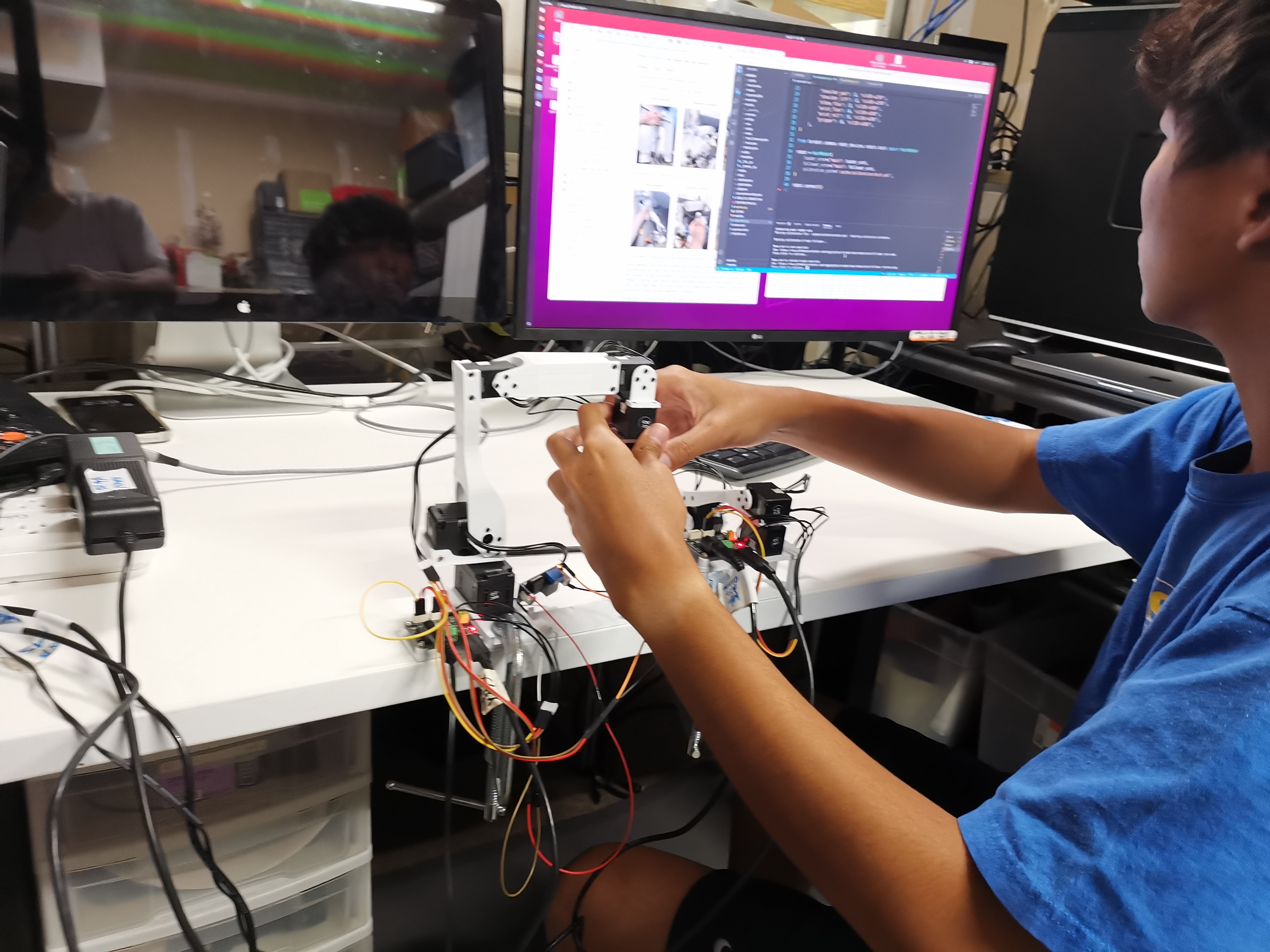

I served as a research intern, delving into robot automation for the pick and place operations of recycled phones within a warehouse setting. Hugging Face’s LeRobot project represents an open - source initiative centered around machine - learning models tailored for real - world robotics applications. Notably, it operates within the realms of imitation and reinforcement learning. This project functions as a wrapper for the Stanford paper ALOHA. Stanford’s ALOHA encompasses a suite of robotics systems. Its core objective is to develop affordable, open - source hardware dedicated to robotics research. Initially, ALOHA began as a bimanual teleoperation system. However, it has since progressed into Mobile ALOHA, a system that seamlessly integrates mobility with advanced whole - body manipulation capabilities. Mobile ALOHA is engineered to execute intricate real - world tasks such as cooking, cleaning, and navigating diverse environments. As a result, it has proven invaluable for research in fields like imitation learning and human - robot interaction. The key deliverable of this project is the successful implementation of object pick - and - place functionality.

Implementation

LeRobot Test Process

Setup

- Hardware:

- Data Collection & Inference: Linux laptop with NVIDIA RTX 4070 GPU.

- Robot Arms: Custom-built based on KOCH 1.1.

Methodology

- Data Collection:

- Performed 100 rounds of task demonstrations.

- Sensor data: RGB, joint states, and action trajectories recorded.

conda activate lerobot python lerobot/scripts/control_robot.py record_dataset --fps 30 --root data --repo-id bobding/koch_test --num-episodes 100 --run-compute-stats 1 --warmup-time-s 2 --episode-time-s 200 --reset-time-s 10

- Training:

- Trained using ACT model.

- Training Parameters

| Hyperparameter | Behavioral Cloning (BC) | Reinforcement Learning (RL) | Notes |

|---|---|---|---|

| Batch Size | 64-256 |

256-512 |

Larger batches for RL stability |

| Learning Rate | 3e-4 (Adam) |

1e-3 to 1e-4 |

Lower for fine-tuning |

| Training Epochs | 50-200 |

500-1k+ |

RL requires more iterations |

| Gamma (γ) | - | 0.99 |

RL discount factor |

| τ (Polyak) | - | 0.005 |

Target network update rate |

DATA_DIR=data python lerobot/scripts/train.py policy=act_koch_real env=koch_real dataset_repo_id=bobding/koch_test hydra.run.dir=outputs/train/act_koch_real

- Inference:

- Deployed the trained policy on the same hardware for real-world testing.

Results

- Success Rate: Achieved 80% on pick and place objects

- Key Observations:

- Action is smoother if using two cameras.

- Material-specific failures (soft/transparent objects).

Challenges & Improvements

- Limitations:

- Data diversity bottleneck

- Future Work:

- Expand dataset with adversarial examples.