Universal Manipulation Interface

In-The-Wild Robot Teaching Without In-The-Wild Robots

Project Overview

As a research intern, I explored robot automation for the pick-and-place of recycled phones in a warehouse. The Stanford project page is https://umi-gripper.github.io

Here is my understanding of how it works:

● First, the goal is to design a correct and intuitive physical interface for human demonstration that still captures all the information required for policy learning. UMI does this by using a fisheye lens to widen the field of view and capture more visual context, and by adding side mirrors to the gripper to provide implicit stereo observation. Combined with the GoPro's built-in IMU sensor, this allows robust tracking even under fast movements.

● Second, the policy interface (i.e., the observation and action representation) must be chosen so that the policy is independent of the hardware, which enables effective skill transfer. Specifically, the researchers use inference-time latency matching to handle differing sensor observation and execution delays, use relative trajectories as the action representation, and apply Diffusion Policy to model the multimodal action distribution.
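To make the relative-trajectory idea concrete, here is a minimal sketch in Python with NumPy. It assumes poses are stored as 4x4 homogeneous transforms. The function names (`pose_to_matrix`, `to_relative_trajectory`, `to_absolute_trajectory`) are my own for illustration and are not taken from the UMI codebase, which may represent poses and rotations differently.

```python
import numpy as np


def pose_to_matrix(position, rotation):
    """Build a 4x4 homogeneous transform from a 3-vector and a 3x3 rotation."""
    T = np.eye(4)
    T[:3, :3] = rotation
    T[:3, 3] = position
    return T


def rot_z(theta):
    """Rotation matrix for a rotation of theta radians about the z axis."""
    c, s = np.cos(theta), np.sin(theta)
    return np.array([[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]])


def to_relative_trajectory(current_pose, future_poses):
    """Express each future pose in the frame of the current gripper pose.

    T_rel = inv(T_current) @ T_future, so the result does not depend on
    where the world (or robot base) frame happens to be.
    """
    inv_current = np.linalg.inv(current_pose)
    return np.stack([inv_current @ T for T in future_poses])


def to_absolute_trajectory(current_pose, relative_poses):
    """Map relative poses back into the world frame at execution time."""
    return np.stack([current_pose @ T for T in relative_poses])


# Illustrative values only: a gripper rotated 90 degrees about z,
# followed by three waypoints that move along the world y axis.
current = pose_to_matrix([0.5, 0.2, 0.3], rot_z(np.pi / 2))
future = [
    pose_to_matrix([0.5, 0.2 + 0.05 * k, 0.3], rot_z(np.pi / 2))
    for k in range(1, 4)
]

relative = to_relative_trajectory(current, future)
recovered = to_absolute_trajectory(current, relative)
```

In this example the motion along the world y axis shows up as motion along the gripper's own x axis in `relative`, which is the property that lets the same action be replayed from a different starting pose or on a different robot base.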

The UMI system provides an easy-to-use and accessible framework for unlocking new robotic manipulation skills. It allows demonstrations of any action in any environment while maintaining reliably high transferability from human demonstrations to the robot. With a wrist-mounted camera on the handheld gripper, the authors show that UMI can generate data for a variety of tasks, including two-handed (bimanual) operation, by changing only the training data.

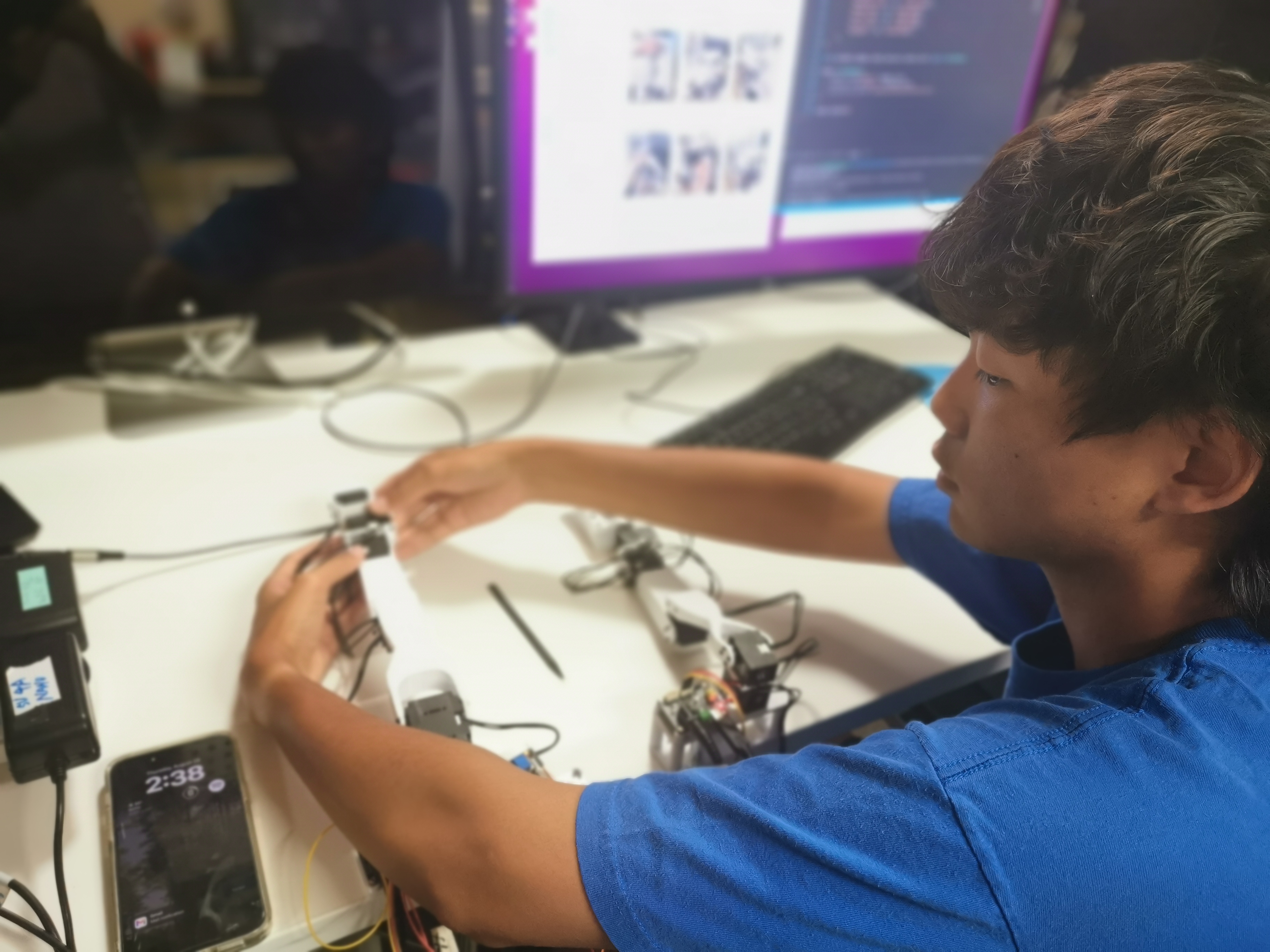

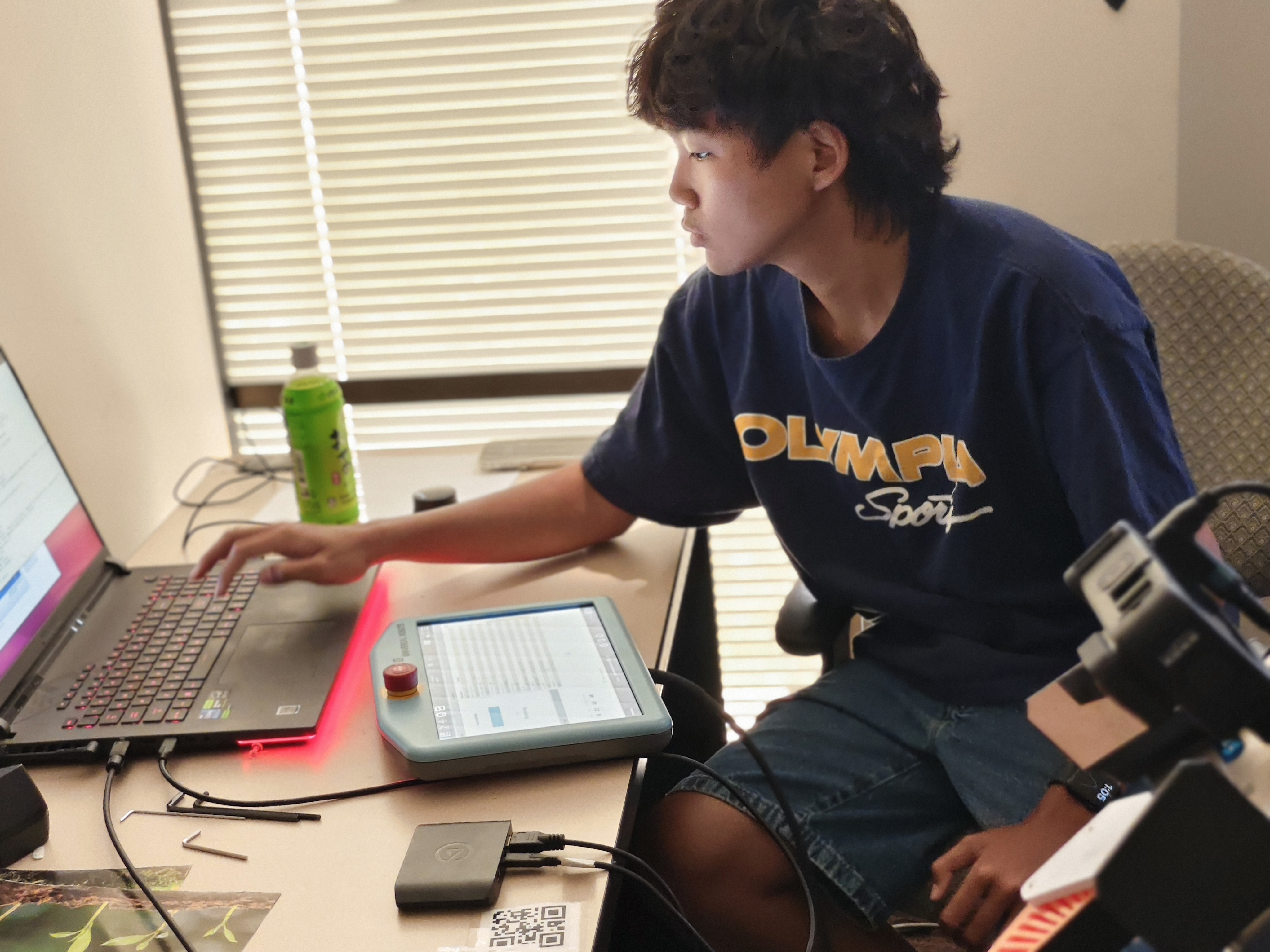

Implementation

I printed the gripper parts on my home 3D printer, a CR10-V3. The WSG50 gripper for the UR5 was purchased on eBay. The demonstration data was collected on a laptop with a 4070 GPU and then copied to an RTX A6000 PC to train the model. Once the model was trained, it was copied back to the laptop, which runs inference.

Robotic Grasping Improvement Process

1. Initial Setup and Testing

First, I downloaded the original model from the authors’ repository and 3D printed the gripper following the exact specifications from the research paper. I collected 50 grasp attempts using the recommended settings:

- Gripper force: 15 N

- Approach speed: 0.1 m/s

- Object: 8 cm diameter cups in different colors

2. Initial Challenges

The initial tests showed unsuccessful grasp actions due to:

- Limited training data (only 50 samples)

- Suboptimal gripper positioning

- Lack of environmental variations

Multiple test runs confirmed these issues.

3. System Improvements

To solve these problems, I made several adjustments:

- Expanded training dataset to 200 samples

- Added environmental variations (different surfaces/positions)

- Modified gripper parameters (increased force to 20 N, adjusted approach angle)
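The before-and-after settings can be tracked as a small configuration object, which makes it easier to see exactly what changed between runs. This is only a sketch: the `GraspConfig` class and its field names are hypothetical and not part of the UMI code. The approach angle is left out because I did not record a specific value above, and the approach speed is assumed to be unchanged.

```python
from dataclasses import dataclass, fields, replace


@dataclass(frozen=True)
class GraspConfig:
    """Hypothetical container for the experiment settings in this write-up."""
    gripper_force_n: float      # gripper force in newtons
    approach_speed_m_s: float   # approach speed in metres per second
    cup_diameter_m: float       # cup diameter in metres
    num_demonstrations: int     # number of collected grasp samples


def describe_changes(old, new):
    """Return {field_name: (old_value, new_value)} for fields that differ."""
    return {
        f.name: (getattr(old, f.name), getattr(new, f.name))
        for f in fields(old)
        if getattr(old, f.name) != getattr(new, f.name)
    }


# Initial setup: 15 N, 0.1 m/s, 8 cm cup, 50 samples.
baseline = GraspConfig(
    gripper_force_n=15.0,
    approach_speed_m_s=0.1,
    cup_diameter_m=0.08,
    num_demonstrations=50,
)

# Improved setup: force raised to 20 N and dataset expanded to 200 samples.
improved = replace(baseline, gripper_force_n=20.0, num_demonstrations=200)

changes = describe_changes(baseline, improved)
for name, (before, after) in changes.items():
    print(f"{name}: {before} -> {after}")
```

Keeping the configuration immutable (`frozen=True`) and deriving new runs with `replace` means every run has an explicit, comparable record of its settings.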

4. Successful Implementation

After retraining with the enhanced dataset:

- Achieved reliable cup grasping

- Demonstrated successful cup rotation

- Maintained consistency across positions

5. Final Validation

Tested under various conditions:

- 10 different cup positions

- Multiple approach angles

- Multiple lighting conditions

- Different surface materials

Final tests showed a 90% success rate.
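A success rate like this can be tallied per test condition as well as overall, which helps show whether failures cluster under one kind of variation. The sketch below is a hypothetical helper, and the entries in `example_log` are made-up placeholders that only demonstrate the bookkeeping. They are not my measured trial data.

```python
from collections import defaultdict


def success_rates(trials):
    """Compute overall and per-condition success rates.

    trials: iterable of (condition_label, succeeded) pairs.
    Returns (overall_rate, {condition_label: rate}).
    """
    counts = defaultdict(lambda: [0, 0])  # label -> [successes, attempts]
    for label, succeeded in trials:
        counts[label][0] += int(succeeded)
        counts[label][1] += 1

    total_successes = sum(s for s, _ in counts.values())
    total_attempts = sum(n for _, n in counts.values())
    overall = total_successes / total_attempts if total_attempts else 0.0
    per_condition = {label: s / n for label, (s, n) in counts.items()}
    return overall, per_condition


# Placeholder log for illustration only (not real results).
example_log = (
    [("position", True)] * 5
    + [("lighting", True)] * 4
    + [("lighting", False)]
)

overall, per_condition = success_rates(example_log)
print(f"overall: {overall:.0%}")
for label, rate in per_condition.items():
    print(f"{label}: {rate:.0%}")
```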